For this problem I worked with a partner and I our first action was understanding the problem. We decided that the problem was that we needed to find some sort of pattern or formula that will tell us how many grid squares a diagonal line will cut through in a given rectangle with m rows and n columns.

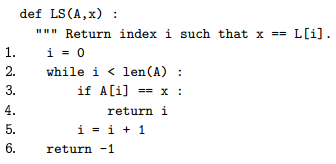

This is what the rectangle looked like with a diagonal through it.

In this rectangle, we have 4 rows and 6 columns. The shaded squares represent the 8 squares that the diagonal line passes through.

So our plan was to at first draw a few diagonal lines starting from the top left corner to a different bottom right corner. We wanted to see what results we would get with different number of rows and columns and see if there were any patterns.

We did this by using the same grid and increased/ decreased the number of rows and columns. We still started drawing the diagonal line from the top left corner, but every time we would choose a different bottom right corner and see how many grid squares the line passes through.

At this point, we came up with a few results, let's call p the number of squares the diagonal line passes through

If m = 4, n = 1, then p = 4

If m = 4, n = 2, then p = 4

If m = 4, n = 3, then p = 6

If m = 4, n = 4, then p = 4

If m = 4, n = 5, then p = 8

If m = 4, n = 6, then p = 8

We noticed that when there are an odd number of columns, the number of squares for that number of columns is equal to the number of squares for the next column amount of columns. But then we were thrown off when m = 4 and n = 4. We thought we were going to get p = 6 as well, but it turns out that when m = n, p = m = n. So we then asked ourselves, "So what does this mean?". Well at first we weren't even sure ourselves.

Currently I am still trying to figure out this problem. I have to admit that I don't actually have the answer to this problem, but it doesn't mean I won't get it. The reason why I'm stuck is because we only tested a couple of cases for when m = 2 and n varying. My partner did other examples with a different number of rows and columns but when we came back to discuss our findings, we were still puzzled. Before I attempt to figure out the solution, I need to test for maybe an odd number of rows or perhaps a larger or smaller number of rows. Right now the amount of information that I have is sort of insufficient.

Before I published this slog, I went to check my partner's slog and surprisingly enough, my partner was able to solve it. Basically what he figured out is that the number of squares that the diagonal line passes through is equal to the number of columns plus the number of rows minus the highest common factor of the both the number of rows and columns.

So in a formula it would be: m + n - HCF(m, n) = p

And it indeed definitely works for the cases that I've tested. Am I 100% this is the correct solution? No but its something right?

Anyways this problem was quite interesting and is very different to what we regularly do in 165.

I also recommend going over to my partner's slog where he goes over what he did to solve this problem: randomcsc165blog.wordpress.com

Oh and if someone actually does know the correct solution and understands it fully, please leave a comment! Perhaps a link to your slog as well.